I Wrote My Dream App in 4 Hours

Using Copilot Agent Mode, I was able to implement an app idea that I’ve been mulling over for years.

Since the release of GPT-3.5 (almost 3 years ago!) I’ve had a few app ideas that I’ve used as a benchmark for how good LLMs are at coding. It’s a list of projects that I test each major model and tool breakthrough against, and if I can build one of these projects, it represents a true advancement in the field. With GitHub Copilot Agent Mode, I’ve finally been able to build the first app on my list, Melticulous.

My entire family is obsessed with Perler Beads (generic name: fuse beads). It’s a deceptively simple craft where you arrange small beads on a grid of pegs and then fuse them together with a hot iron. You can create really elaborate designs with them, and they’re really good for making pixel art.

I’ve long wanted to create an app that turns arbitrary images into Perler bead patterns. I think it would be really neat to be able to make hangable art based on photos of your kids, or landscapes you took on your dream vacation.

Proof of Concept

I’ve been trying to build this app with every LLM since 2022, with slowly improving results, but I would always get stuck on the color quantization algorithm, that is, the algorithm for choosing which colors should be part of the palette. I wanted to limit the number of colors to 16, because who (besides my wife) has a warehouse full of beads? But many images have millions of different colors, and I know nothing about the math involved in reducing the number of colors in an image. This is what I needed LLMs for: somehow magically figuring out the math involved in quantizing the colors in a way that felt correct to the user. With every model, I was hitting a brick wall at this stage.

That is, until VS Code (GitHub Copilot) added agent mode and increased its context windows back in April. I tried prompting it on a whim using the free mode with GPT-4o and, to my surprise, it only took a few tweaks to get a working proof of concept! Here's my experience taking it from PoC to a solid, usable app, with some tips along the way.

How does it work? Just ask!

I showed a few people the PoC and got some feedback, but the biggest question software developers asked was "How does it work?" I knew that the key breakthrough was to ask it to choose the most unalike colors to be part of the palette, but I didn't know how it made that choice. So I just asked!

generate a document for me that gives me enough information about the app to discuss it with other devs. it should include enough detail about the tech decisions made to be able to discuss in technical company. take note of interesting algorithms like the min-max color quantization and explain how they work to someone that knows math but has forgotten a lot of it :)

It built me a document that explained everything in simple language, including a section on color quantization:

Min-Max (Max-Min) Color Quantization

To reduce the number of bead colors while preserving image diversity, the app uses a greedy max-min algorithm:

- Start with the most common color in the image as the first palette entry.

- Iteratively add the color that is farthest (in Euclidean RGB space) from all colors already in the palette.

- Repeat until the palette reaches the desired size (e.g., 16 colors) or all unique colors are included.

- Pad with white if needed.

This ensures the palette covers the full range of colors in the image, not just the most frequent ones, and avoids clustering similar colors.
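The steps in that description can be sketched in a few lines of Python. This is a minimal illustration, not Melticulous's actual code: the function names (`max_min_palette`, `nearest_color`, `dist_sq`) are hypothetical, and it reads "farthest from all colors already in the palette" as "maximizes the distance to its nearest palette entry", which is the usual meaning of max-min selection.

```python
from collections import Counter

def dist_sq(a, b):
    """Squared Euclidean distance between two RGB tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def max_min_palette(pixels, size=16, pad=(255, 255, 255)):
    """Greedy max-min palette selection (hypothetical sketch).

    pixels: iterable of (r, g, b) tuples.
    Returns a list of exactly `size` colors.
    """
    counts = Counter(pixels)
    if not counts:
        return [pad] * size

    # Step 1: start with the most common color.
    first = counts.most_common(1)[0][0]
    palette = [first]

    # For every unique color, track the squared distance to its
    # closest palette entry so far.
    nearest = {c: dist_sq(c, first) for c in counts}

    # Steps 2 and 3: repeatedly add the color farthest from the palette.
    while len(palette) < size:
        candidate = max(nearest, key=nearest.get)
        if nearest[candidate] == 0:
            break  # every unique color is already in the palette
        palette.append(candidate)
        for c in nearest:
            nearest[c] = min(nearest[c], dist_sq(c, candidate))

    # Step 4: pad with white (or another filler) if the image had
    # fewer unique colors than requested.
    palette += [pad] * (size - len(palette))
    return palette

def nearest_color(color, palette):
    """Map one pixel to the closest palette entry."""
    return min(palette, key=lambda p: dist_sq(color, p))
```

For example, given mostly black pixels plus a few white, dark gray, and red ones, `max_min_palette(pixels, size=3)` keeps black, white, and red and drops the dark gray, because it sits right next to black. Each pixel is then snapped to a bead color with `nearest_color`.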

Agents Can’t See

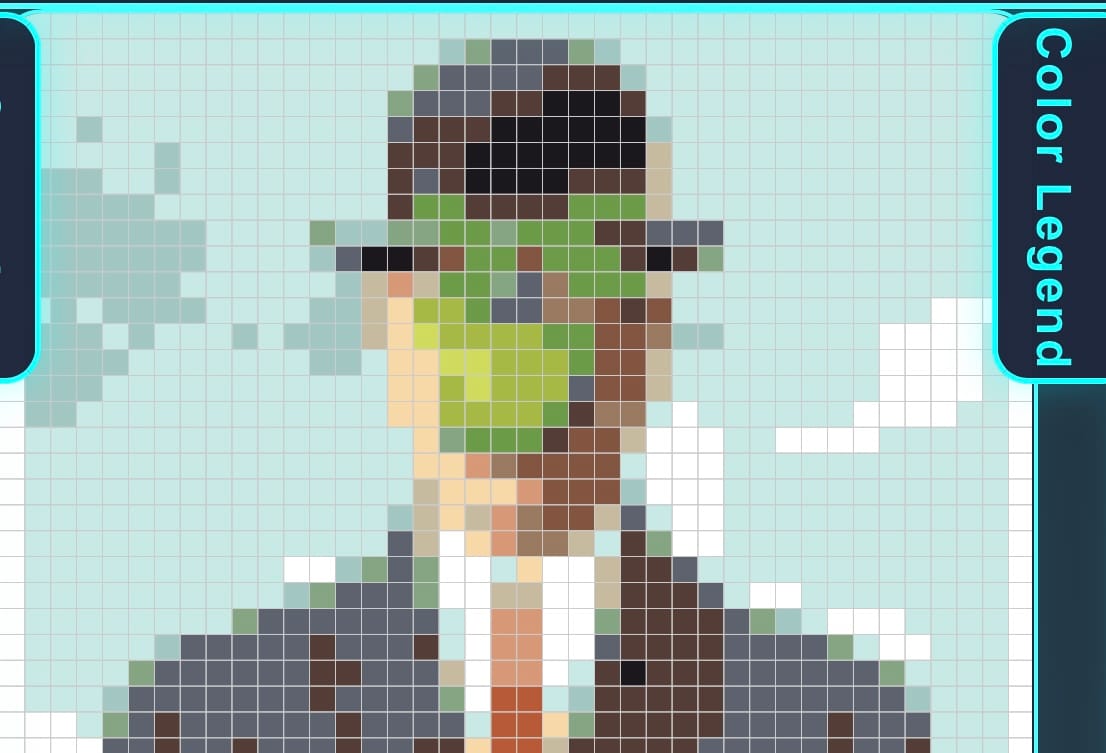

In order for the app to be useful, it needed to generate very precise patterns that fit under the plastic fuse-bead peg boards exactly. If the grid measurements are off by even a millimeter, it can mess up the whole image. I spent a while trying to iterate on the layout with Copilot, but ultimately I had to physically print out the results and hold them up to a peg board to see if it was printing in alignment.

My first try was way off, even though the measurements were to the correct fuse bead pattern specifications. I needed to iterate, but I realized that printing out a new sheet every time I made a small tweak would have been a huge waste of time and paper, so I had to find another way to test the alignment.

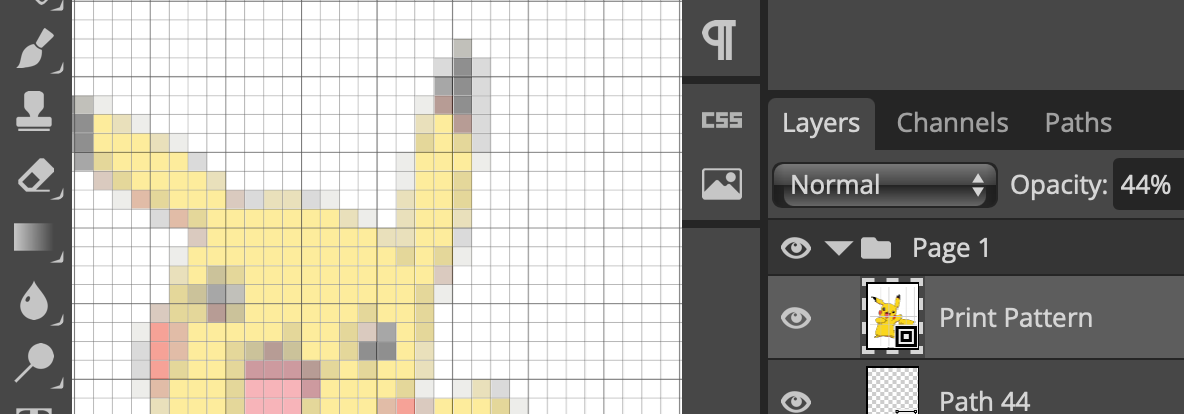

Luckily, there are tons of free printable fuse bead patterns online, so in order to streamline the process, I downloaded a free PDF and measured all of the allowances in Adobe Acrobat. I fed all of that into Copilot and then took the output and overlaid it on the printable pattern I had downloaded using the excellent Photoshop clone, Photopea. This gave me a way tighter (and less wasteful) feedback loop and I was able to get a very precise output. After the overlay was exact, I printed out a physical copy to verify it aligned with a real peg board.

Sometimes It's Smarter Than You

It was around this part of the process that I realized that Copilot had done something smart that I hadn't thought of. When I was conceptualizing this app, I assumed it would need a backend API because processing the image would take a long time. But the LLM built all of the processing into the browser, and the UI was actually really snappy. This design simplified a lot of things on my end: I don't have to maintain compute infrastructure or manage access. The app can be hosted directly on a CDN for fast download, and performance is limited only by the user’s own device.

But the biggest advantage is that because Melticulous runs entirely in the browser, images never leave the user's device, so users don't have to worry about me storing their files, EVER.

The 90/90 Rule

All of these early positive results were really encouraging, but the devil is in the details and the 90/90 rule started to set in.

The first 90 percent of the code accounts for the first 90 percent of the development time. The remaining 10 percent of the code accounts for the other 90 percent of the development time.

I'm not sure that I can fully pin this on Copilot, as this rule has been around for a long time, but there was a fair amount of whack-a-mole at this stage. I would try to add some UI calls to action and the grid would be misaligned. Or I would try to change the wording of something and the buttons would look weird.

Use git and Commit Often

I have long had a policy of not writing a stitch of code on any project without doing git init first. It's an excellent habit, and it saved me more than a few times while building this project.

I was at the point where the app was looking really polished and I decided I needed to add an about page. I had Copilot generate the page and was making some tweaks to the wording when Copilot went rogue and replaced the entire app with a large spinning logo. I loaded up the page after a "wording edit" and there was no app anymore. It had reached into the folder, grabbed an image that I wasn't using for anything, and made it huge and rotating.

Then Copilot acted like everything was fine. I tried to argue a bit, and I even tried stepping backward using the built-in functionality, but the app was destroyed at that point. I reverted to a known good version in git and tried again. Don't even think about vibe coding without git. You need it. I'm serious.

Test What's Important to You

By the same token, make sure to add unit tests for all of the core functionality that's important to you. To me, the cell alignment of the printed pattern was the most important immutable aspect of this application. So I had Copilot generate a unit test and tested the unit test by purposely screwing up the alignment, forcing the test to fail.
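A test like that can be quite small. The sketch below is an illustration rather than the app's real test: the helper `peg_centers_mm` and every number in it (29 pegs per side, a 5 mm pitch, a 10 mm page margin, a 0.1 mm tolerance) are hypothetical placeholders for whatever you measure on your own peg board. The second test mirrors the "break it on purpose" step by checking that a slightly wrong pitch pushes the last peg outside the tolerance.

```python
import unittest

def peg_centers_mm(count, pitch_mm, margin_mm):
    """Centers of `count` pegs along one axis, in mm from the page edge.

    Hypothetical helper: each cell is `pitch_mm` wide, and the peg sits
    in the middle of its cell.
    """
    return [margin_mm + pitch_mm * (i + 0.5) for i in range(count)]

class GridAlignmentTest(unittest.TestCase):
    # All values below are assumptions for illustration only.
    PEGS = 29
    PITCH_MM = 5.0
    MARGIN_MM = 10.0
    TOLERANCE_MM = 0.1

    def test_first_and_last_peg_centers(self):
        centers = peg_centers_mm(self.PEGS, self.PITCH_MM, self.MARGIN_MM)
        # Expected values are written out as literals so that a change
        # to the layout code cannot silently change the expectation.
        self.assertAlmostEqual(centers[0], 12.5, delta=self.TOLERANCE_MM)
        self.assertAlmostEqual(centers[-1], 152.5, delta=self.TOLERANCE_MM)

    def test_wrong_pitch_is_detected(self):
        # A pitch that is off by only 0.05 mm accumulates across the row.
        centers = peg_centers_mm(self.PEGS, 5.05, self.MARGIN_MM)
        drift = abs(centers[-1] - 152.5)
        self.assertGreater(drift, self.TOLERANCE_MM)
```

Saved as a test module, this runs with `python -m unittest`. Checking the last peg matters most, because small per-cell errors add up over the width of the board.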

Unit tests alone aren't enough unless you run them regularly. I asked Copilot to add a step in the GitLab pipeline to run my unit tests before any merge to main.

Sometimes, It's Just Not The Agent’s Day

The last bit of caution I'd like to leave people with is that sometimes Copilot, like any developer, gets stuck trying to solve a problem a certain way. You need to be on top of this and realize when it's stuck in a loop trying to do something that will never work. In this situation, you have two options, which you can employ separately or together:

- Start the context over: ask Copilot to summarize the conversation and the work that it's done and store it to a file. Then erase your history and re-describe the problem. Sometimes restating the problem a different way to a brand new instance is enough to nudge Copilot in the right direction.

- Switch models: This may not be a popular opinion, but most of the modern models are almost the same in terms of their abilities. Some really thoughtful tasks require a reasoning model, but other than that, they're pretty interchangeable. So interchange them: don't tie yourself to a given model. Think of changing models as a way to change perspective.

Conclusion

If you're a software developer, you need to be learning these tools. At this point, it's not optional. Agents aren't going to replace human software engineers, but human engineers who aren't using these tools run the risk of being replaced by human engineers who are.

If you’re into crafting with Perler Beads, don’t forget to take Melticulous for a spin, and if you have any enhancement ideas, let me know!